In artificial intelligence, 'scaling up' has become a dominant trend: tech companies try to improve their AI systems by training them on vast amounts of internet data. That approach concerns AI expert Abeba Birhane, a vocal critic of the industry's values and practices.

Birhane, a senior adviser in AI accountability at the Mozilla Foundation, recently conducted research on popular AI image-generator tools and found a troubling pattern: training these tools on ever-larger amounts of online data has led them to produce racist outputs, particularly targeting Black men.

Birhane is originally from Ethiopia and now lives in Ireland. Her background in cognitive science led her to question the lack of attention given to data quality in AI research, and her work involves auditing large-scale datasets to uncover potential biases and ethical implications.

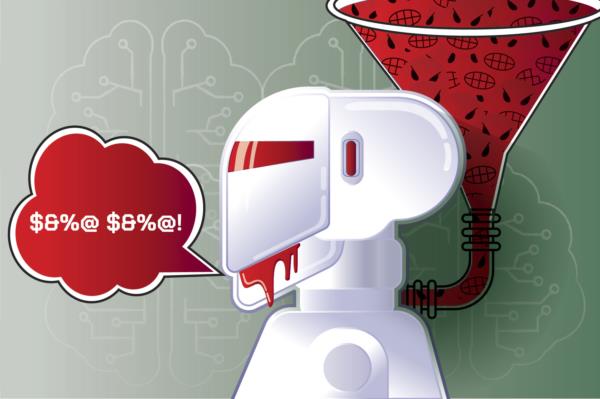

One of the key issues Birhane has identified is the industry's emphasis on 'scaling up' as a measure of success. While many researchers argue that increasing dataset size leads to more accurate models, Birhane's research has shown that this approach can exacerbate existing problems, such as the proliferation of hateful and discriminatory content.

Her studies have revealed that as datasets are scaled up, instances of hateful and aggressive content also increase significantly. She has also observed biases against darker-skinned individuals, particularly men, in the labels that AI systems assign.

Despite proposing recommendations to address these issues, Birhane remains skeptical about the willingness of the AI industry to implement meaningful changes. She highlights the need for stronger regulations and public awareness to hold companies accountable for the societal impact of their AI technologies.

As the debate around ethics and accountability in AI continues to evolve, Birhane's work serves as a critical reminder of the importance of addressing biases and ensuring fairness in the development of AI systems.