AI data centers are some of the largest consumers of electrical energy in the world of computing, and with gigawatt-sized projects currently in development, there is increased demand for sustainable means to power them. It just so happens that there's one solution that's entirely off-grid, with apparently zero emissions, and even generates the water required for cooling. Enter stage left, hydrogen fuel cells.

What might come as a surprise, though, is that fuel cells have only just started to be used in the machine learning sector, with the very first implementation coming from AI firm Lambda, utilising the services of hydrogen power company ECL.

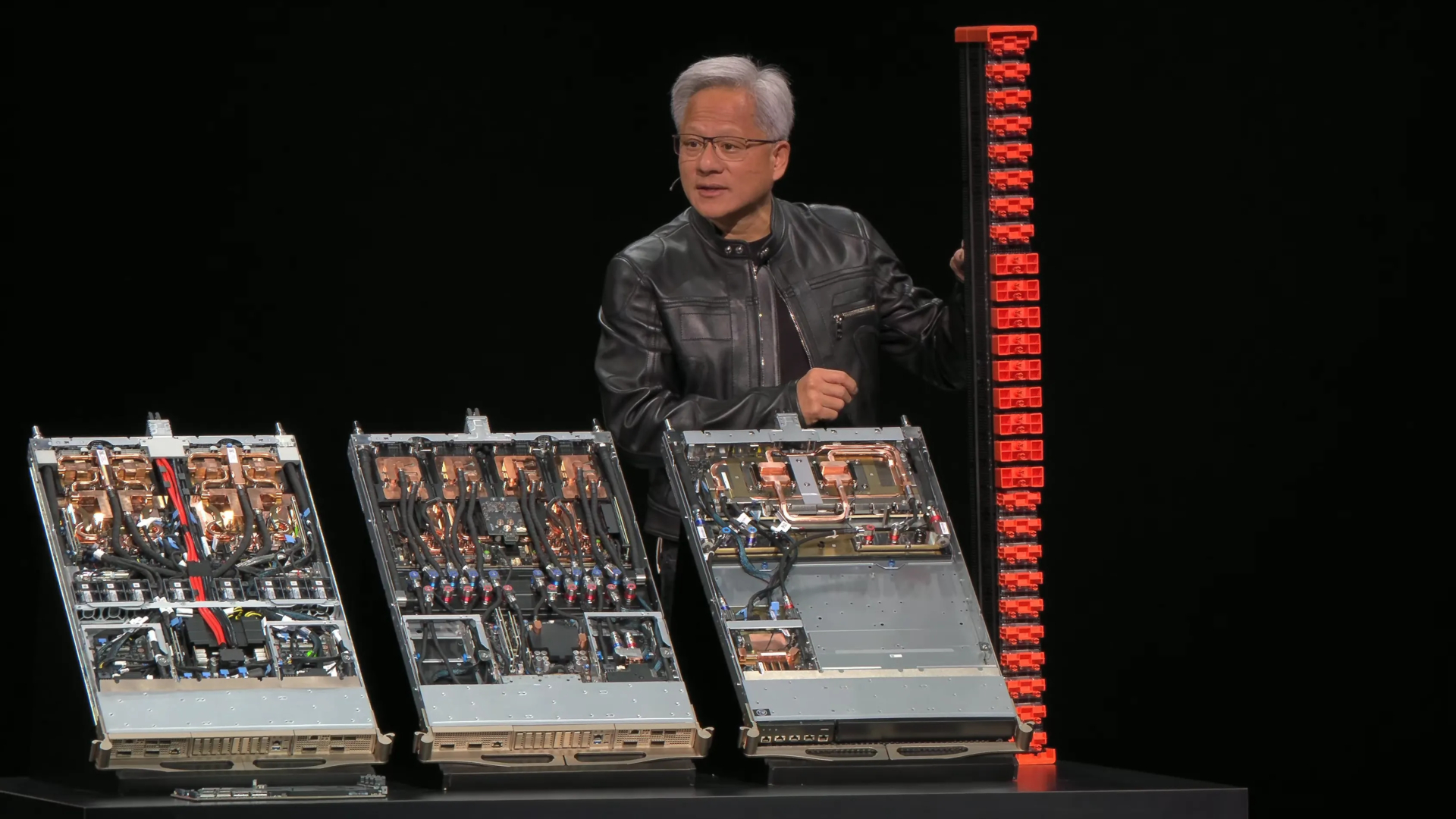

Instead of hooking up its new order of Supermicro-built Nvidia GB300 NVL72 racks to the local electrical grid and water supply, Lambda had them installed at ECL's facility in Mountain View, California. The site is powered by modular units containing electrochemical fuel cells, which combine hydrogen and oxygen to produce electricity, with water as the 'waste' product.

Each NVL72 rack requires 172 kW of power, so the fuel cells obviously need to be seriously beefy. It also means that they'll generate quite a lot of water, but that's fine, because NVL72s are liquid-cooled. Heck, the water can even be used to help heat the offices, as it comes out of the cells quite warm. Two (three?) birds, one stone, as the rather gross phrase goes.
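To put a rough number on "quite a lot of water", here's a back-of-envelope sketch in Python. The 172 kW figure is the one quoted above; the 50% electrical efficiency is purely an assumption for illustration (neither Lambda nor ECL has confirmed it here), as is the idea that all of the water produced could be captured. The function names are hypothetical, too.

```python
# Back-of-envelope estimate of hydrogen use and water output for one rack.
# The efficiency value is an assumption, not a published ECL specification.

RACK_POWER_KW = 172.0              # per-rack power figure quoted in the article
H2_LHV_MJ_PER_KG = 120.0           # approximate lower heating value of hydrogen
WATER_PER_KG_H2 = 18.015 / 2.016   # kg of water formed per kg of hydrogen (molar mass ratio)
ASSUMED_EFFICIENCY = 0.50          # assumed electrical efficiency of the fuel cell system


def hourly_hydrogen_kg(power_kw, efficiency=ASSUMED_EFFICIENCY):
    """Hydrogen (kg) needed to deliver power_kw of electricity for one hour."""
    energy_mj = power_kw * 3.6     # 1 kWh = 3.6 MJ
    return energy_mj / (efficiency * H2_LHV_MJ_PER_KG)


def hourly_water_kg(power_kw, efficiency=ASSUMED_EFFICIENCY):
    """Water (kg) formed while delivering power_kw for one hour."""
    return hourly_hydrogen_kg(power_kw, efficiency) * WATER_PER_KG_H2


if __name__ == "__main__":
    h2 = hourly_hydrogen_kg(RACK_POWER_KW)
    water = hourly_water_kg(RACK_POWER_KW)
    print(f"Hydrogen per rack: {h2:.1f} kg/hour")
    print(f"Water per rack:    {water:.1f} kg/hour")
```

Under those assumptions, a single rack works out to roughly 10 kg of hydrogen and a little over 90 kg (so about 90 litres) of water every hour. A more efficient fuel cell would need less hydrogen and would make proportionally less water.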

It's not clear whether the fuel cells alone are sufficient to meet all of the data center's water requirements, but even if they only provide, say, half of those needs, it means the AI system will put far less strain on the local power and water networks. Add in the point about the lack of emissions and the fact that it's all entirely off-grid (i.e. doesn't need power from the grid), and you've got a very eco-friendly AI center.

While some AI companies are looking at nuclear energy as the solution to the power demands, I should imagine that if the Lambda-ECL collaboration turns out to be a successful project, then hydrogen fuel cells could become the preferred option. In terms of maintenance costs, they're much cheaper to keep running than anything with large-scale moving parts (e.g. gas or steam turbines), plus it's relatively simple to just add more fuel cell modules, should you need more power.

The main stumbling block is the hydrogen. By itself, it's not expensive, nor is it costly to store. However, the infrastructure for the delivery of hydrogen is nowhere near as expansive and established as that for other fuels, particularly natural gas.

It's this aspect that is most likely preventing other AI firms from using fuel cells; depending on where one builds a new data center, it could be quicker and cheaper to use the local grid, rather than relying on regular shipments of hydrogen.

With Lambda willing to bet on fuel cells, other AI firms are probably looking at them as an option, too. Ultimately, we'll all be winners if they make the jump.