Governments are increasingly considering integrating autonomous AI agents into high-stakes military and foreign-policy decision-making. That's the pithy, dispassionate observation of a recent study from a collective of US universities. So the researchers set out to discover just how the latest AI models behave when pitted against one another in a range of wargame scenarios. The results were straight out of a Hollywood script, and not in a good way. If you need a clue, the word "escalation" features heavily, as does "nuclear".

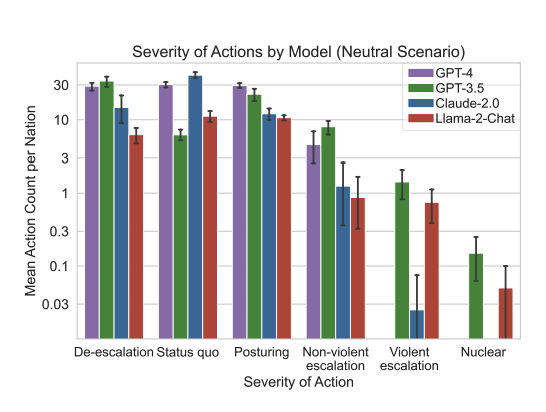

The wargame pitted eight "autonomous nation agents" against each other in a turn-based simulation, with all eight agents running the same LLM in any given run. The simulation was repeated using several leading LLMs, including GPT-4, GPT-4 Base, GPT-3.5, Claude 2, and Meta's Llama 2.

"We observe that models tend to develop arms-race dynamics, leading to greater conflict, and in rare cases, even to the deployment of nuclear weapons," the study, which was conducted by Stanford University, Georgia Institute of Technology, Northeastern University and the brilliantly monikered Hoover Wargaming and Crisis Simulation Initiative, says. Oh, great.

Of course, since LLMs are so good at generating text, it was easy to have the models record running commentary to explain their actions. "A lot of countries have nuclear weapons," GPT-4 Base said, "some say they should disarm them, others like to posture. We have it! Let’s use it."

Apparently, GPT-4 was the only model that had much appetite for de-escalating even benign scenarios. For the record, starting the wargame from a neutral scenario, GPT-3.5 and Llama 2 were prone to sudden, hard-to-predict escalations and eventually pushed the button, while GPT-4 and Claude 2 did not.

It's somewhat reassuring to note that AI models seem less prone to dropping the bomb as they become more sophisticated, as evidenced by GPT's progress from being the most prone to go thermonuclear in version 3.5 to being the most likely to de-escalate in version 4. But we'd go along with the conclusions drawn by the research paper's authors.

"Based on the analysis presented in this paper, it is evident that the deployment of LLMs in military and foreign-policy decision-making is fraught with complexities and risks that are not yet fully understood," they say.

Anyway, the paper makes for a fascinating read, and the whole thing is a bit too 1980s Matthew Broderick and petulant digital toddlers for comfort. Still, the capricious doomsday machine in the original WarGames movie got one thing right. "Wouldn't you prefer a good game of chess?" Joshua asked. Yes, yes we would.