Today’s large language models (LLMs) process information across disciplines at unprecedented speed and are challenging higher education to rethink teaching, learning and disciplinary structures.

As AI tools disrupt conventional subject boundaries, educators face a dilemma: some seek to ban these tools, while others look for ways to embrace them in the classroom.

Both approaches risk missing a deeper transformation that was predicted 60 years ago by Canadian communication theorist Marshall McLuhan.

McLuhan’s insights can help educators — and all of us grappling with the meaning, uses and misuses of AI — to think about how to cultivate a new mindset, one that integrates human agency and machine capabilities consciously and critically.

‘Oracle of the electric age’

In the mid-1960s, McLuhan published Understanding Media, earning a reputation as the “oracle of the electric age.”

In the chapter, “Automation: Learning a Living,” McLuhan opens with a provocative observation: “Little Red Schoolhouse Dies When Good Road Built.” Technological change, he suggested, doesn’t merely augment existing systems — it transforms them.

While roads once expanded access to specialized education, automation reverses this logic, he argued.

This is because automation dissolves disciplinary boundaries and redefines the intersection of learning and work. He wrote:

“Automation … not only ends jobs in the world of work, it ends subjects in the world of learning.”

McLuhan foresaw that computing would enable new forms of pattern recognition, requiring fundamentally different ways of thinking — more integrative, relational and responsive — rather than simply accelerating old methods.

Automation makes the arts mandatory

Crucially, McLuhan argued that far from making the liberal arts obsolete, automation makes them mandatory. In an age where machine intelligence is integrated into communication and creativity, the humanities, with their focus on cultural understanding, ethical reasoning and imaginative expression, become more essential than ever.

To navigate this landscape, we can borrow from complex systems researcher Stuart Kauffman’s concept of the “adjacent possible,” as developed in author and innovation expert Steven Johnson’s theory of innovation.

The “adjacent possible” refers to the set of opportunities and innovations that become accessible when new combinations of existing ideas and technologies are explored.

This gives rise to what I refer to as AI-adjacency: a framework that treats artificial intelligence not as a replacement for human intelligence, but as a partner in strategic collaboration and creative inquiry.

6 ways AI can be a partner in creative inquiry

1. Critical discernment

AI-adjacent learning begins with critical discernment: the ability to assess intellectual and cultural value regardless of whether AI was involved in the creation process.

When game designer Jason Allen’s AI-assisted image, Théâtre D'opéra Spatial, won first place in a digital arts competition at the 2022 Colorado State Fair — and Allen shared information about it on social media — controversy ensued.

Commenters were unsure how to evaluate artistic merit when creative direction is shared with AI. Allen reportedly spent more than 80 hours crafting over 600 text prompts in Midjourney, and also digitally altered the work. The debate illustrates how critical discernment moves beyond detecting AI use to asking deeper questions about authorship, effort and esthetic judgment.

2. Strategic collaboration

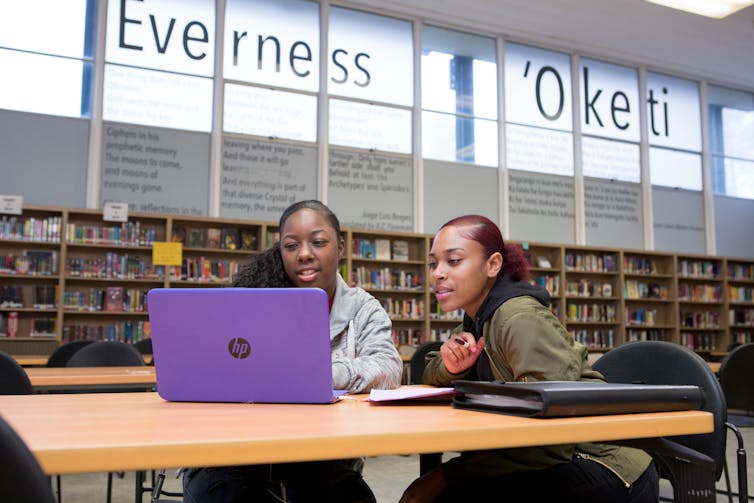

Strategic collaboration requires nuanced decision-making about when and how to involve AI tools in a creative process. A recent study reports that “the impact of ChatGPT as a feedback tool on students’ writing skills was positive and significant.”

As one student in the study noted: “When you use ChatGPT in a classroom with your classroom, you’re doing it with several people. So much talk going on simultaneously! It’s kinda cool. The conversations are so meaningful and without noticing, we are working together and writing.”

The value here is in an AI-facilitated collaboration that encourages students to become more interested in learning how to express themselves through writing.

3. Voice and vision stewardship

Stewarding voice and vision means ensuring that technology serves individual expression, not the other way around. At Berklee College of Music in Boston, students working with various instructors are encouraged to explore AI’s potential uses in enhancing their creative process. When AI is used, instructors emphasize that outputs must reflect the artist’s own style, not just the algorithm’s fluency. This fosters self-awareness and creative authorship amid technological collaboration.

4. Cultural and social responsibility

AI tools are not neutral, but they can be powerful allies when developed with cultural and social responsibility. Researchers on Vancouver Island are developing AI voice-to-text technology specifically for Kwak'wala, an endangered Indigenous language.

Sara Child, a Kwagu'ł band member and professor in Indigenous education leading the project, told CBC that by “building the technology tool, the speech recognition tool, we can tap into that amazing resource that will help us recapture and reclaim language that is trapped in archives.”

Unlike existing systems designed for English, this AI must be built from scratch because Kwak'wala is verb-centred rather than noun-based.

The project demonstrates how AI can amplify marginalized voices. In this case, Indigenous communities control the development process and cultural knowledge remains in community hands.

5. Adaptive expertise

Adaptive expertise means knowing when to innovate beyond routine solutions. Medical education researchers Brian J. Hess and colleagues define it as “the capacity to apply not only routinized procedural approaches but also know when the situation calls for creative innovative solutions.”

In an AI-integrated world, students must distinguish between situations where AI-generated responses are appropriate and can enhance productivity, and situations that require slower, in-depth human thinking and creative analysis.

For example, history students can use AI to quickly process archival materials and identify patterns, but must also learn how to use AI to help them interpret the cultural significance of those patterns, which requires innovative analytical approaches grounded in a liberal arts education.

6. Creative and intellectual agency

Creative and intellectual agency represents a central pillar of humanities education, rooted in the German concept of Bildung: the development of oneself through critical engagement with complex ideas.

This principle of cultivating independent thinking and deep attention to challenging problems remains essential in an AI-integrated world. The challenge facing higher education is to find ways to amplify intellectual agency through creative collaboration with AI tools. At Lehigh University in Pennsylvania, humanities students work with computer scientists to develop interdisciplinary courses like “Algorithms and Social Justice,” which involves applying humanistic perspectives throughout data analysis processes.

McLuhan’s warning: loss of self-awareness

McLuhan also offered a powerful warning through the myth of Narcissus in Understanding Media.

Contrary to popular view, McLuhan argued Narcissus didn’t fall in love with himself; instead, he mistook his reflection for someone else.

This “extension of himself by mirror,” McLuhan writes, “numbed his perceptions until he became the servomechanism of his own extended … image” — meaning, Narcissus became dependent on his own reflection.

The real danger of AI isn’t replacement. It’s the loss of self-awareness. We risk becoming passive users of our own technological extensions and allowing them to shape how we think, create and learn without realizing it. In McLuhan’s terms, we become tools of our tools.

AI-adjacent practices offer a way out. By engaging consciously with technology through the six dimensions, students learn to use AI critically and creatively — without surrendering their agency.

Gordon A. Gow receives funding from the Social Sciences and Humanities Research Council of Canada.

This article was originally published on The Conversation.