Rarely are things just as they seem, and quantum computing fits that description better than most fields. Described as a fundamental shift in our processing capabilities, quantum computing has developed at a rapid pace in the past few years. Yet according to a research paper published in the journal of the Association for Computing Machinery, practically useful quantum computing (the kind usually described as running circles around even the most powerful classical computers) still requires groundbreaking discoveries in a number of areas before it can dethrone a mere graphics card.

The most surprising element in the paper is the conclusion that a number of applications will remain better suited for classical computing (rather than quantum computing) for longer than previously thought. The researchers say this is true even for quantum systems running across more than a million physical qubits, whose performance the team simulated as part of their research.

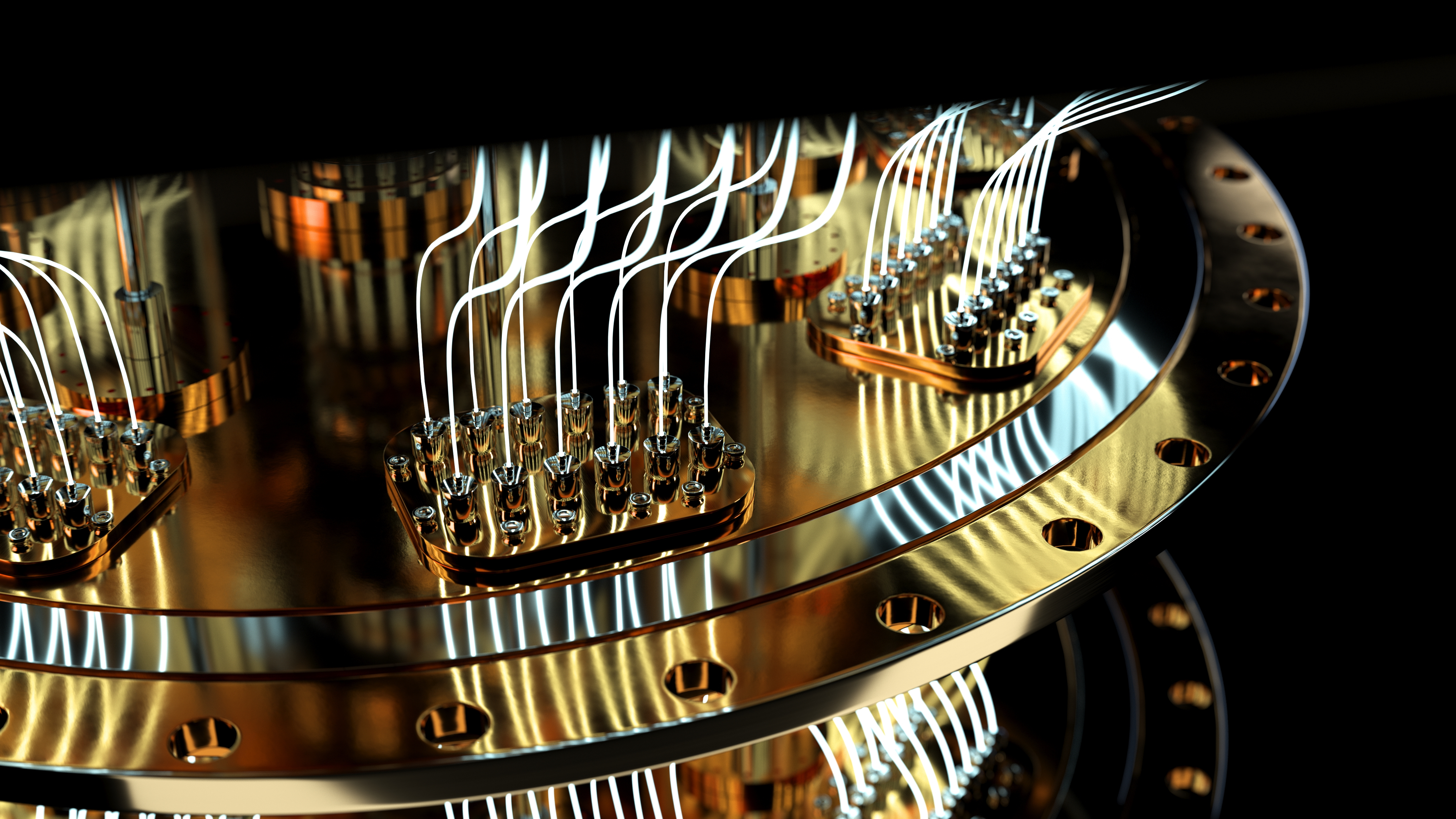

Considering how today's top system, IBM's Osprey, still "only" packs in 433 qubits (with an IBM-promised 4,158-qubit system launch for 2025), the timescale towards a million qubits extends further ahead than expected.

The problem, say the researchers, is not with the applications or workloads themselves: drug discovery, materials science, scheduling, and optimization problems in general are still very much in quantum computing's crosshairs. The issue is with the quantum computing systems themselves, meaning their architectures and their current and future inability to ingest the enormous amounts of data some of these applications require before a solution is even found. It's a simple I/O problem, not unlike the one we all knew before NVMe SSDs became the norm, when HDDs bottlenecked CPUs and GPUs left and right: data can only be fed so quickly.

Yet how much data is sent, how fast it reaches its destination, and how long it takes to process are all elements of the same equation. In this case, the equation is for quantum advantage — the moment where quantum computers offer performance that's beyond anything possible for classical systems. And it seems that in workloads that require the processing of large datasets, quantum computers will have to watch as GPUs such as Nvidia's A100 run by — likely for a long, long while.
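To make that equation concrete, here is a minimal back-of-the-envelope sketch in Python. It assumes the simplest possible model, in which end-to-end time is just transfer time plus compute time. The function names and every number in it are hypothetical placeholders for illustration, not figures from the paper.

```python
def transfer_seconds(data_bytes: float, bandwidth_bytes_per_s: float) -> float:
    """Time needed to feed the input into the machine, ignoring latency."""
    return data_bytes / bandwidth_bytes_per_s


def total_seconds(data_bytes: float, bandwidth_bytes_per_s: float,
                  compute_seconds: float) -> float:
    """End-to-end time under the assumed model: transfer first, then compute."""
    return transfer_seconds(data_bytes, bandwidth_bytes_per_s) + compute_seconds


def io_fraction(data_bytes: float, bandwidth_bytes_per_s: float,
                compute_seconds: float) -> float:
    """Share of the end-to-end time spent moving data rather than computing."""
    transfer = transfer_seconds(data_bytes, bandwidth_bytes_per_s)
    return transfer / (transfer + compute_seconds)


if __name__ == "__main__":
    dataset_bytes = 1e12  # hypothetical 1 TB input

    # (label, assumed input bandwidth in bytes/s, assumed compute time in s).
    # Both rows are made-up values chosen only to contrast the two regimes.
    scenarios = [
        ("fast input, slow compute", 1e11, 3600.0),
        ("slow input, fast compute", 1e4, 1.0),
    ]
    for label, bandwidth, compute in scenarios:
        total = total_seconds(dataset_bytes, bandwidth, compute)
        share = io_fraction(dataset_bytes, bandwidth, compute)
        print(f"{label}: total {total:.3g} s, I/O share {share:.1%}")
```

Under this model, once transfer time dominates the total, making the compute step faster barely moves the result, which is the shape of the argument the researchers make about large datasets.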

Quantum computing might have to make do with solving big compute problems on small data, while classical systems will have the unenviable task of processing the "big data" problems. That is a hybrid approach to quantum computing that's been gaining ground for the last few years.

According to a blog post by Microsoft's Matthias Troyer, one of the researchers involved in the study, this means that workloads such as drug design and protein folding, as well as weather and climate prediction would be better suited for classical systems after all, while chemistry and material science perfectly fit the bill for the "big compute, small data" philosophy.

While this may feel like a bucket of ice water poured over the hopes for quantum computing, Troyer was quick to emphasize that this isn't the case: "If quantum computers only benefited chemistry and material science, that would be enough. Many problems facing the world today boil down to chemistry and material science problems," he said. "Better and more efficient electric vehicles rely on finding better battery chemistries. More effective and targeted cancer drugs rely on computational biochemistry."

But there's another element to the researchers' thesis, one that's harder to ignore: it seems that current quantum computing algorithms would be insufficient, by themselves, to guarantee the desired "quantum advantage" result. This time the obstacle isn't the systems engineering complexity of a quantum computer but a plain performance problem: quantum algorithms in general just don't provide enough of an acceleration. Grover's algorithm, for instance, offers a quadratic speedup over classical algorithms; but according to the researchers, that's not nearly enough.
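A rough way to see why a quadratic speedup can fall short is to compute where the two approaches break even. The sketch below assumes a toy cost model: a classical machine needs N steps, a quantum machine needs N^(1/k) steps (k = 2 for a Grover-style quadratic speedup), and each quantum step is much slower than a classical one. The per-step times are illustrative assumptions, not figures from the paper, and the function names are hypothetical.

```python
import math


def crossover_size(t_classical: float, t_quantum: float, k: float = 2.0) -> float:
    """Problem size N at which N * t_classical equals N**(1/k) * t_quantum.

    Solving for N gives N = (t_quantum / t_classical) ** (k / (k - 1)).
    """
    if k <= 1:
        raise ValueError("k must be greater than 1 for there to be a speedup")
    ratio = t_quantum / t_classical
    return ratio ** (k / (k - 1))


def crossover_seconds(t_classical: float, t_quantum: float, k: float = 2.0) -> float:
    """Runtime of the classical machine at the break-even problem size."""
    return crossover_size(t_classical, t_quantum, k) * t_classical


if __name__ == "__main__":
    # Assumed per-step costs, chosen only for illustration:
    t_c = 1e-9  # seconds per classical step
    t_q = 1e-4  # seconds per quantum step, i.e. 100,000x slower per step

    for k in (2.0, 3.0, 4.0):
        n_star = crossover_size(t_c, t_q, k)
        seconds = crossover_seconds(t_c, t_q, k)
        print(f"k={k:g}: break-even at N ~ {n_star:.3g}, "
              f"classical runtime there ~ {seconds:.3g} s")
```

In this toy model the break-even size for a quadratic speedup grows with the square of the per-step slowdown, so a large constant-factor penalty pushes the crossover to very large problems. Higher-order speedups shrink that exponent, which is consistent with the researchers' call to look beyond quadratic gains.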

"These considerations help with separating hype from practicality in the search for quantum applications and can guide algorithmic developments," the paper reads. "Our analysis shows it is necessary for the community to focus on super-quadratic speeds, ideally exponential speedups, and one needs to carefully consider I/O bottlenecks."

So, yes, it's still a long road toward quantum computing. Yet the IBMs and Microsofts of the world will steadily carry on their research to enable it. Many of the issues facing quantum computing today are the same ones we faced in developing classical hardware; the CPUs, GPUs, and architectures of today just had a much earlier and more impactful start. But they still had to undergo the same design and performance iterations as quantum computing eventually will, within its own brave new timeframe. The fact that the paper was penned by scientists with Microsoft, Amazon Web Services (AWS) and the Scalable Parallel Computing Laboratory in Zurich, all parties with vested interests in the development and success of quantum computing, just makes that goal all the more likely.