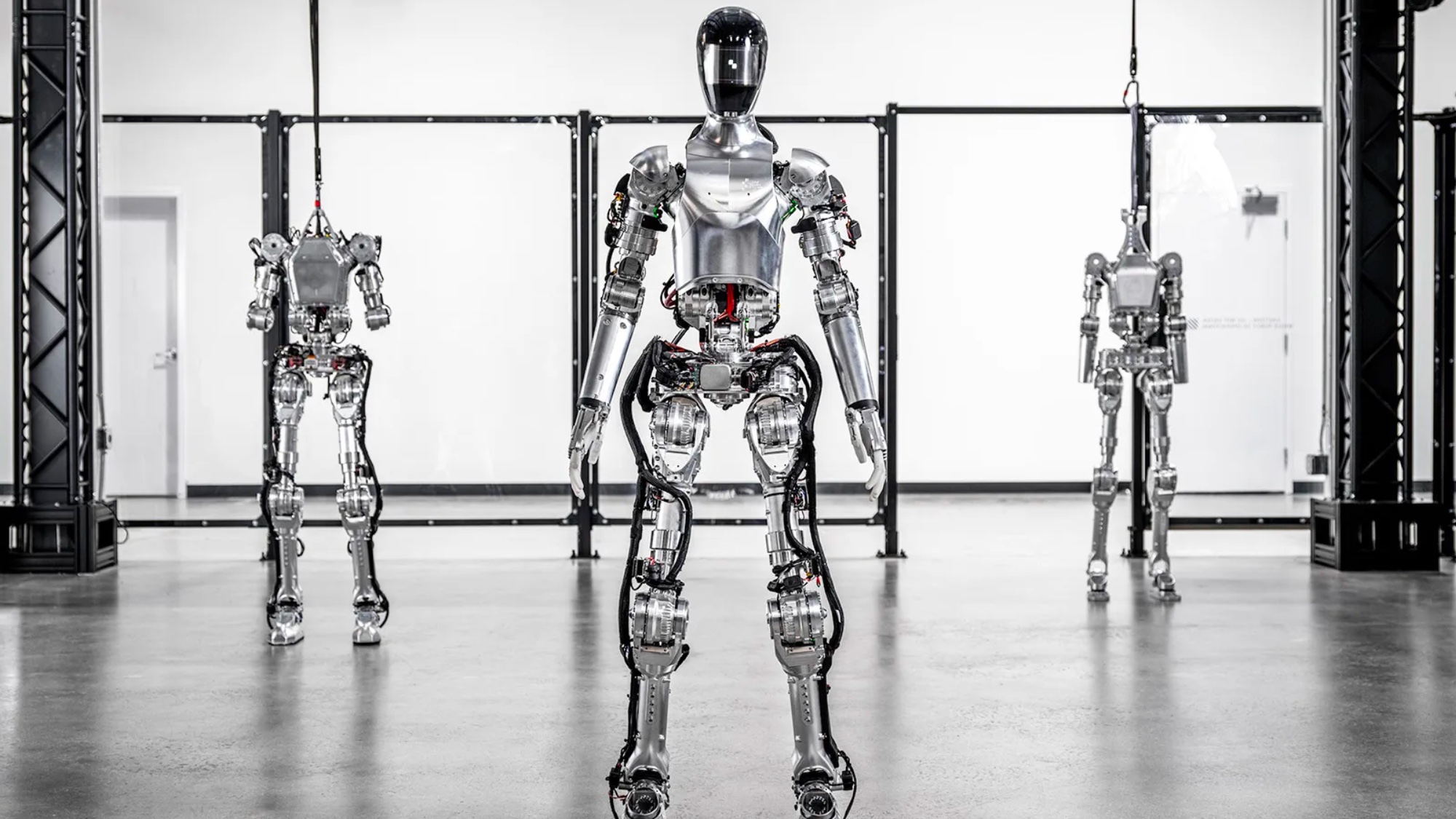

Microsoft and OpenAI are in talks to take a financial stake in humanoid robotics startup Figure, a deal that could one day lead to 'bots capable of doing jobs too dangerous for humans.

Bloomberg first reported the potential investment that could see $500 million raised by a range of investors. This would value Figure at $1.9 billion or higher depending on how much is raised.

The deal isn't final and nobody involved has confirmed it is happening; the reports have come from anonymous sources close to the plan. It does present a logical investment step for OpenAI, which has long explored the idea of robotics and large language models.

During a recent episode of Bill Gates’ podcast, OpenAI CEO Sam Altman revealed that the company is already investing in robotics companies and will continue to do so.

Does this mean robots powered by ChatGPT?

While it doesn’t specifically mean there will be robots that have ChatGPT in their brain, the concept of using a large language model like GPT-4 (which powers ChatGPT) as the control mechanism for a robot isn’t a new one.

We’ve already seen some research experiments where engineers have used GPT-4 to allow robots to create poses in response to a voice prompt, and Google is working on integrating its own large language model technology into robots.

During CES 2024, we also saw toy robots using GPT-4 to provide natural language responses, and devices such as cat flaps using AI vision models like GPT-4V to spot mice.

What has Sam Altman said on robotics?

During his chat with Gates, Altman also revealed the company once had a robotics division but wanted to focus on the mind first. He said: “We realized that we needed to first master intelligence and cognition before adapting to physical forms.”

Once robots are capable of thinking for themselves, and if the hardware improves enough to take advantage of this mental capacity, “it could rapidly transform the job market, particularly in blue-collar fields,” Altman predicted.

The original prediction for AI was that it would take those blue-collar jobs first, automating repetitive tasks and leaving humans out in the cold.

In reality, it seems to be happening the other way around. Altman explained that ten years ago everyone thought AI would affect blue-collar work first, then white-collar work, and that it would never impact creative roles.

“Obviously, it’s gone exactly the other direction,” he told Gates. “If you’re having a robot move heavy machinery around, you’d better be really precise with that. I think this is just a case of you’ve got to follow where technology goes.”

Altman added that what they are now starting to see is that OpenAI models are being used by hardware companies, utilizing its language understanding to “do amazing things with a robot.”

How soon will we see these robots?

While it would be nice to order up a robot helper to do your household chores, cook you dinner and even watch the kids, it will be some time before the machines reach that level of ability, and foundation AI models are just a stop-gap.

Dr Richard French, senior systems scientist at the University of Sheffield, told Tom’s Guide that while foundation AI models can deliver semi-useful human-robot interaction, they could also make things harder, as you'd need to unpick the parts of the model not needed for that robot.

The type of AI behind ChatGPT can “speed up generic programming and awareness but also deliver semi-useful Human Robot Interaction (HMI),” he explained. “This is things like Natural Language Processing, Vision, Text to image generation.”

“Suppose your computing power and memory storage are up to the job, this is the key driver to being able to achieve partial intelligence, but it is still likely to need another couple of decades before realization,” said Dr Richard French.

The downside is that a foundation AI model can be a blunt instrument and offer more than is needed for the robot’s assigned task.

“From a robot building point of view it will cause a lot of fun undoing the Foundation AI when you realize that any application for a robot is a highly specific use case and you have either overtrained or undertrained the model,” he said.

Dr French predicts that human-level robotics like those envisioned by Sam Altman will require the development of neuromorphic computing, in which the computer is modeled after systems in the human brain.

Dr Robert Johns, an engineer and data analyst at Hackr, agrees that foundation models could give robots a boost in perception, decision making and other abilities.

"However, robots need very specialized knowledge to perform particular tasks," he told Tom's Guide. "Like a bot that makes coffee needs different skills than one vacuuming your house! Because of this, exclusive foundation models tailored to specific robot applications would likely work better than generalized ones," such as GPT-4.

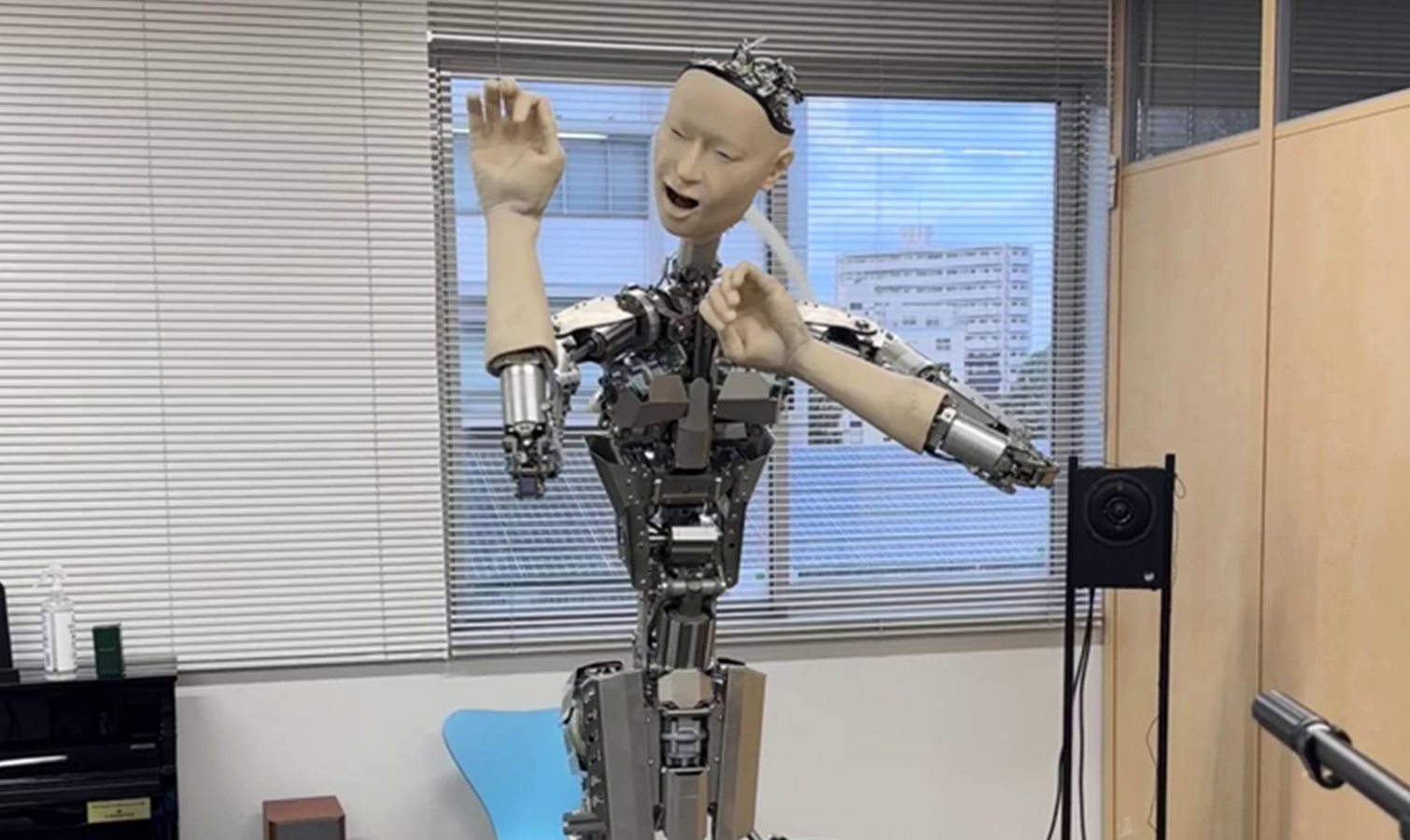

So while AI might be growing at pace, while the promise of artificial general intelligence is on the horizon and while robots are starting to walk, talk and dance, getting machines that can do all of the above on their own, with a degree of autonomy, may take a little longer.