What you need to know

- Meta plans to label AI-generated images from other companies to increase transparency on platforms like Facebook, Instagram, and Threads.

- It is collaborating with industry partners to create tools for spotting AI-made content, focusing on invisible markers like watermarks and metadata.

- In the coming months, Meta plans to start applying labels in all languages supported by each app, as important elections take place around the world.

Meta says it's going to slap labels on AI-created images from other companies, like OpenAI and Google.

Nick Clegg, Meta's global affairs president, wrote in a blog post that the company wants to be more transparent on platforms like Facebook, Instagram, and Threads when it comes to generative AI. The plan is to let users know when the pictures they're seeing are AI-generated.

With many countries gearing up for elections in 2024, all eyes are on how Meta will handle misinformation on its platforms.

As generative AI gets easier to use, more fake pictures are popping up on social media and being passed off as real. Meta plans to tackle the problem by detecting and labeling AI images on its platforms, even when they're made with other companies' tools.

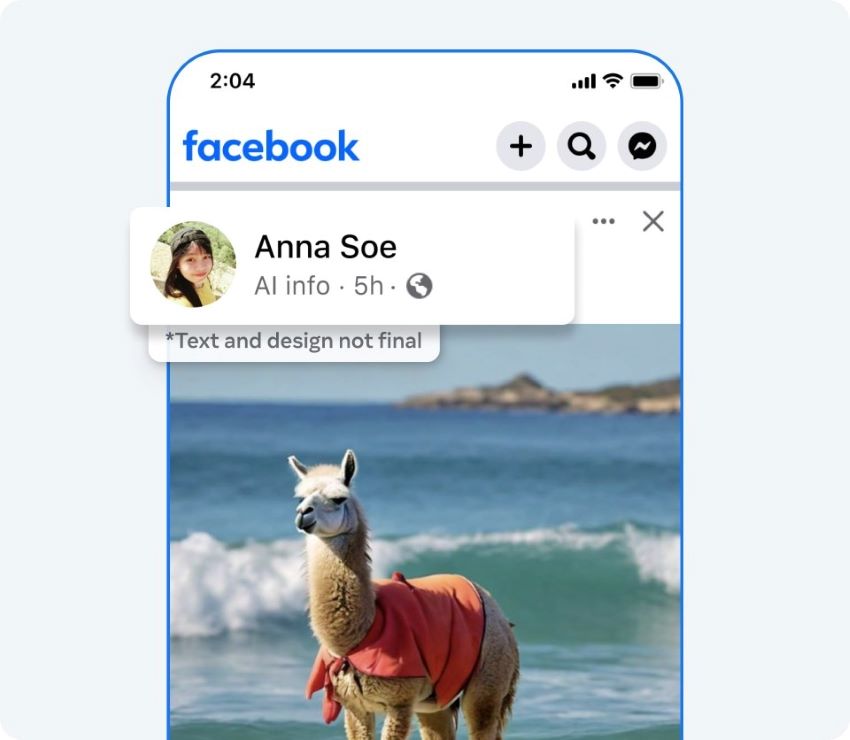

Meta already slaps "Imagined with AI" labels on photorealistic images made with its own AI, and now it wants to do the same for images from other AI tools. Meta's own AI images carry visible markers, invisible watermarks, and embedded metadata that identify them as AI-generated.

According to Clegg, Meta is teaming up with industry partners to build tools that can identify AI-made content using "invisible markers" like watermarks and metadata. The goal is to detect these markers at scale, so that when images from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock are shared on a Meta platform, they get the proper label.

"We’re building this capability now, and in the coming months we’ll start applying labels in all languages supported by each app," Clegg writes. "We’re taking this approach through the next year, during which a number of important elections are taking place around the world."

As for AI-made video and audio, Clegg notes that companies haven't started embedding invisible signals in them at the same scale as they have with images. As a result, Meta can't yet detect video and audio generated by other companies' AI tools.

That said, Meta will introduce a feature that lets users disclose when they share AI-generated video or audio. If you post realistic video or audio that has been digitally created or altered, you'll be required to use this disclosure tool, and Meta may apply penalties if you fail to do so.

According to Clegg, if an image, video, or audio clip has been created or altered in a way that carries a high risk of materially deceiving people on an important matter, Meta may attach a more prominent label to it.

To make it harder for users to strip out those markers, Meta's FAIR AI research lab has developed a system that builds the watermarking mechanism directly into the image-generation process for certain types of generators. This is especially useful for open-source models, because it keeps the watermarking from being switched off.

Meta says it will keep collaborating with industry partners and stay in discussion with governments as generative AI becomes more common.