Leading artificial intelligence researchers have boycotted South Korea’s top university after it teamed up with a defence company to develop “killer robots” for military use.

An open letter sent to the Korea Advanced Institute of Science and Technology (KAIST) stated that the 57 signatories from nearly 30 different countries would not visit or collaborate with the university until it stopped developing autonomous weapons.

“It is regrettable that a prestigious institution like KAIST looks to accelerate the arms race to develop such weapons,” the letter states.

“They have the potential to be weapons of terror. Despots and terrorists could use them against innocent populations, removing any ethical restraints. This Pandora’s box will be hard to close if it is opened.”

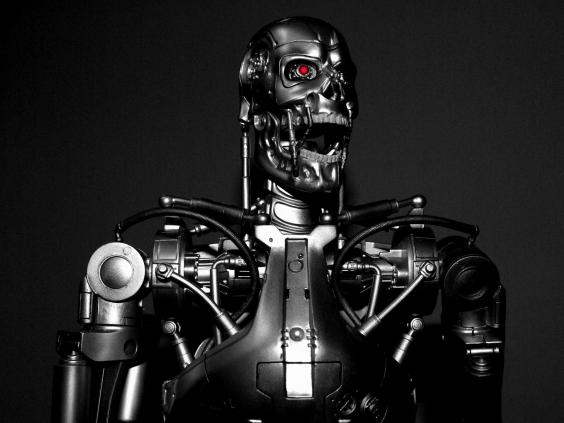

The extent of this threat has been likened by some security experts to that of Skynet, a fictional artificial intelligence system that first appeared in the 1984 film The Terminator. After becoming self-aware, Skynet set out to wipe out humanity using militarized robots, drones and war machines.

“If we combine powerful burgeoning AI technology with insecure robots, the Skynet scenario of the famous Terminator films all of a sudden seems not nearly as far-fetched as it once did,” Lucas Apa, a senior security consultant from the cybersecurity firm IOActive, told The Independent.

Apa said robots were at risk of being hacked and of malfunctioning, citing an incident at a US factory in 2016 that resulted in the death of one of the workers.

“Similar to other technologies, we’ve found robot technology to be insecure in a number of ways,” Apa said. “It is concerning that we are already moving towards offensive military capabilities when the security of these systems are shaky at best. If robot ecosystems continue to be vulnerable to hacking, robots could soon end up hurting instead of helping us.”

KAIST president Sung-Chul Shin responded to the open letter, claiming that the university had “no intention” of developing lethal autonomous weapons.

“I reaffirm once again that KAIST will not conduct any research activities counter to human dignity including autonomous weapons lacking meaningful human control,” he said.

It is not the first time that AI academics have warned of the dangers posed by weaponized robots, with a similar letter sent to Canadian Prime Minister Justin Trudeau last year.

Other notable scientific figures, including the physicist Stephen Hawking, have gone so far as to say that AI has the potential to destroy civilization.

“Computers can, in theory, emulate human intelligence, and exceed it,” Hawking said last year. “AI could be the worst event in the history of our civilization. It brings dangers, like powerful autonomous weapons, or new ways for the few to oppress the many.”